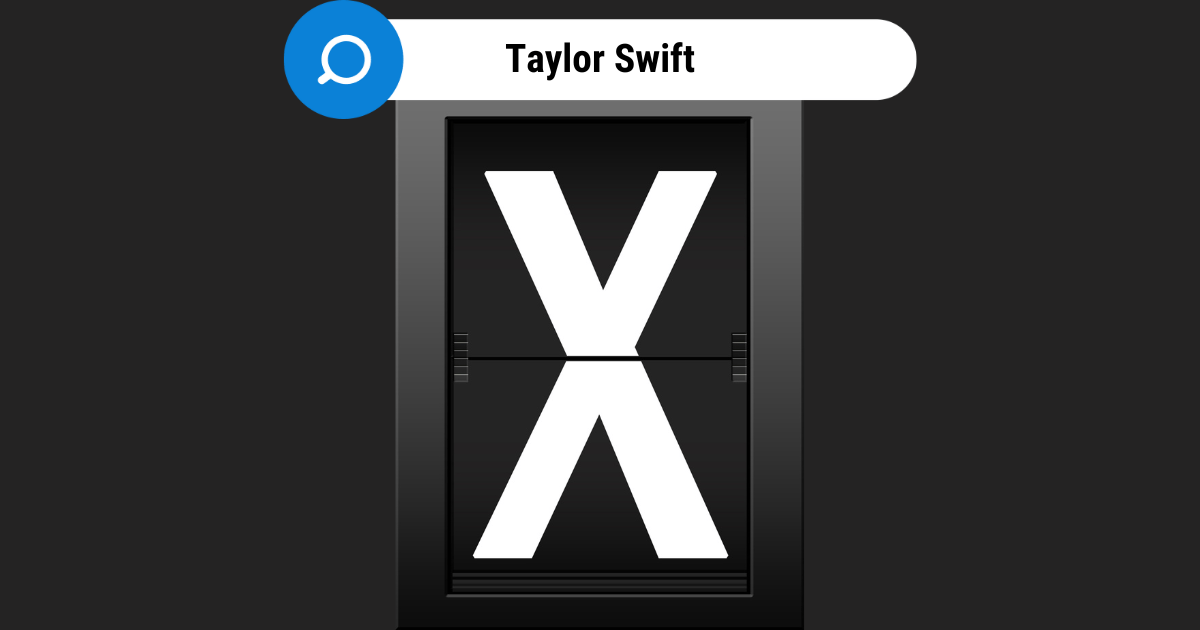

You Can't Search Taylor Swift on X

X (formerly known as Twitter) has confirmed that it temporarily blocked searches for Taylor Swift’s name on its platform. This decision was made to prioritize user safety after nonconsensual pornographic deepfakes of the singer went viral.

The issue began when explicit, AI-generated images of Taylor Swift started circulating on X. The images, created without Swift's consent, spread quickly and drew widespread concern and criticism. Swift's fans responded by reporting accounts that shared the images and by flooding related hashtags with positive content to limit their visibility.

X’s Response

X’s head of business operations, Joe Benarroch, stated, “This is a temporary action and done with an abundance of caution as we prioritize safety on this issue.” The platform’s teams actively worked to remove the identified images and took appropriate actions against the accounts responsible for posting them.

Investigations traced the likely origin of the images to a Telegram group known for creating nonconsensual AI-generated images of women. The group reportedly used free tools, including Microsoft Designer, to create these images.

This incident highlights the challenges social media platforms face in curbing the spread of nonconsensual, sexually explicit images, especially those generated by AI. While some AI image generators have restrictions intended to prevent such content, many others lack comparable safeguards.

X’s decision to block searches for Taylor Swift’s name underscores the complexities of managing harmful content on social media platforms. As AI technology continues to evolve, the need for effective measures to protect individuals’ rights and prevent the spread of nonconsensual content becomes increasingly crucial.